DiffuseBot Improves Design of Soft Robots with Physics-Augmented Generative Diffusion Models

Designing a soft robot system requires weighing trade-offs among geometry, components, and behavior, which is challenging and time-consuming.

Tsun-Hsuan Wang, Pingchuan Ma, Yilun Du, Andrew Spielberg, Joshua B. Tenenbaum, Chuang Gan, and Daniela Rus, from MIT, presented DiffuseBot, a framework based on generative diffusion models that addressed these problems.

DiffuseBot was a framework for co-designing soft robot morphology and control across a variety of tasks. Its goal was to leverage diffusion models, including pre-trained 3D generative models, to generate high-performing robot designs. By augmenting diffusion-based synthesis with physics-based dynamical simulation, DiffuseBot automated and accelerated the co-design process and generated soft robot morphologies optimized for locomotion, manipulation, and passive dynamics tasks. The resulting designs were both diverse and performant. DiffuseBot aimed to narrow the gap between the diversity of designs found in nature and the iterative nature of modern soft robotics, providing a framework for efficient automatic content creation in the field.

How they developed DiffuseBot

Soft robot co-design referred to the joint optimization of the shape and control of soft robots. This involved finding the best geometry, stiffness, and actuator placement for the robot, as well as determining the appropriate control signals for the actuators. The goal was to minimize a loss function that depended on both the robot morphology and the control parameters. Co-design optimization was challenging because of the complex relationships between body and control variables, competing design modifications, and trade-offs between flexibility and effectiveness. They aimed to leverage diffusion models to search for high-performing robot designs, both by generating diverse robots and by exploiting the structural biases in pre-trained 3D generative models.
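One way to write the co-design problem described above is as a constrained minimization. The notation here is an assumption made for illustration and may not match the authors' symbols: $\Psi$ stands for the design (geometry, stiffness, actuator placement), $\phi$ for the control parameters, $s_h$ and $u_h$ for the simulated state and actuation at step $h$, $f$ for the simulator's dynamics, and $\pi_\phi$ for a hypothetical controller.

```latex
\min_{\Psi,\,\phi}\; \mathcal{L}(\Psi, \phi)
  = \mathcal{L}\big(\{s_h, u_h\}_{h=1}^{H}\big)
\quad \text{s.t.} \quad
s_{h+1} = f(s_h, u_h; \Psi), \qquad u_h = \pi_\phi(s_h)
```

The loss is evaluated on a simulated trajectory, so a change to the design alters the dynamics that the controller must handle. That coupling is what makes the body and control variables hard to optimize separately.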

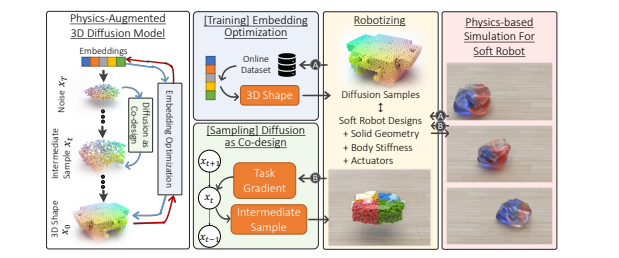

They explained the process of robotizing the diffusion samples obtained from the Point-E diffusion model. The samples took the form of surface point clouds and could not be used directly as robots in the physics-based simulation, so they first had to be converted into robot geometries that the simulation could evaluate. This conversion supported a procedure they called “Embedding Optimization”: data was generated with the diffusion model, the samples were evaluated in physics-based simulation, and the aggregated results were used to optimize the embeddings.

They used a Material Point Method (MPM)-based simulation that required solid geometries as inputs. This presented two challenges: converting surface point clouds into solid geometries, and handling the lack of structure in diffusion samples at intermediate steps. To address these challenges, they used a denoising diffusion implicit model (DDIM) to predict a clean sample at each diffusion time step. The clean samples were then used to construct better-structured data for simulation. The predicted surface points were converted into solid geometries by reconstructing a surface mesh and sampling a solid point cloud within its interior. They modified the optimization approach from Shape As Points to achieve differentiable Poisson surface reconstruction.

To integrate the solidification process into the diffusion samplers, they computed the gradient of the solid geometry with respect to the predicted surface points using Gaussian kernels, so that each solid point was influenced by the predicted surface points near it. They also defined actuator placement and stiffness parameterization for the robot based on the solid geometry: actuators were embedded as muscle fibers, and stiffness was assumed to be constant for simplicity.
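Two of the steps above can be illustrated with a small sketch: the standard DDIM-style estimate of a clean sample from a noisy one, and a Gaussian-kernel weighting of surface points around a solid point. This is not the authors' code. The function names, the `sigma` bandwidth, the `alpha_bar` value, and the normalized-weight form of the kernel are all assumptions chosen for illustration, and the "predicted" noise is simply the true noise, standing in for a trained network.

```python
import math
import random


def predict_clean_sample(x_t, eps_pred, alpha_bar_t):
    """DDIM-style estimate of the clean point cloud x0 from a noisy one.

    Uses x0_hat = (x_t - sqrt(1 - alpha_bar_t) * eps) / sqrt(alpha_bar_t),
    where alpha_bar_t in (0, 1] is the cumulative noise-schedule product.
    """
    signal_scale = math.sqrt(alpha_bar_t)
    noise_scale = math.sqrt(1.0 - alpha_bar_t)
    return [
        tuple((x - noise_scale * e) / signal_scale for x, e in zip(point, noise))
        for point, noise in zip(x_t, eps_pred)
    ]


def gaussian_weights(solid_point, surface_points, sigma=0.1):
    """Normalized Gaussian-kernel weights of surface points for one solid point.

    Hypothetical helper: nearer surface points receive larger weights.
    The smallest squared distance is subtracted first to avoid underflow.
    """
    sq_dists = [
        sum((a - b) ** 2 for a, b in zip(solid_point, s)) for s in surface_points
    ]
    nearest = min(sq_dists)
    raw = [math.exp(-(d - nearest) / (2.0 * sigma ** 2)) for d in sq_dists]
    total = sum(raw)
    return [w / total for w in raw]


# Toy check: noise a random point cloud, then recover it with the true noise.
rng = random.Random(0)
x0 = [tuple(rng.uniform(-1.0, 1.0) for _ in range(3)) for _ in range(8)]
eps = [tuple(rng.gauss(0.0, 1.0) for _ in range(3)) for _ in range(8)]
alpha_bar = 0.3  # assumed schedule value for a single time step
x_t = [
    tuple(math.sqrt(alpha_bar) * a + math.sqrt(1.0 - alpha_bar) * e
          for a, e in zip(point, noise))
    for point, noise in zip(x0, eps)
]
x0_hat = predict_clean_sample(x_t, eps, alpha_bar)
max_err = max(abs(a - b) for p, q in zip(x0, x0_hat) for a, b in zip(p, q))

# Weights of the predicted surface points around a solid point at the origin.
weights = gaussian_weights((0.0, 0.0, 0.0), x0_hat)
```

With a trained noise predictor the estimate would only be approximate, especially at early diffusion steps, which is consistent with the text's point that intermediate samples are poorly structured.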

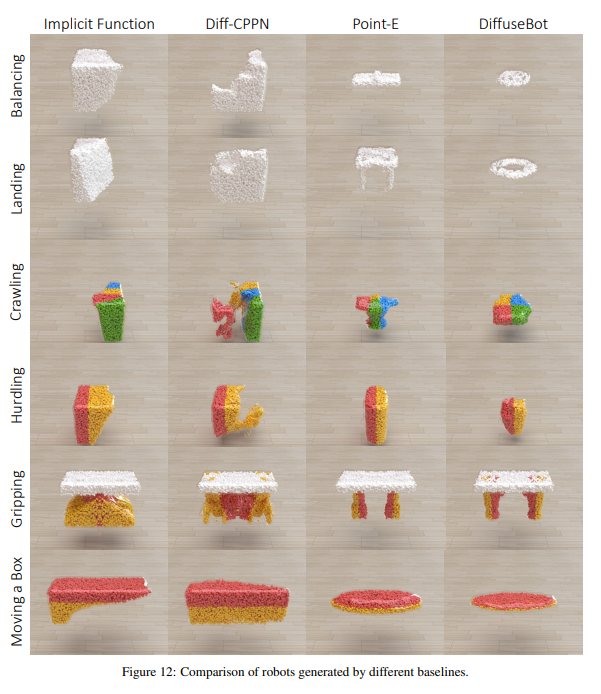

They proposed a Physics Augmented Diffusion Model (PADM) for improving robotic performance through optimization and co-design. The PADM consisted of two main steps: embedding optimization and diffusion as co-design.

In the embedding optimization step, new data was actively generated from the diffusion model and stored in a buffer, and physics-based simulation was used to evaluate its performance. The embeddings were then optimized conditioned on the diffusion model and a skewed data distribution. This approach eliminated the need for manual effort in curating a training dataset and avoided the risk of deteriorating the overall generation. It also saved the cost of storing model weights for each new task.

The diffusion as co-design step further improved the performance of individual samples by reformulating the diffusion sampling process as a co-design optimization. This was achieved by incorporating gradient-based optimization techniques and Markov Chain Monte Carlo (MCMC) sampling. The diffusion time was converted into a robot design variable, and the control variable was optimized to adapt to the design. This process was performed periodically during the diffusion steps.

They provided experimental results demonstrating that augmenting diffusion models with physical simulation improved physical utility. The results showed improved performance in passive dynamics tasks (balancing, landing), locomotion tasks (crawling, hurdling), and manipulation tasks (gripping, moving a box).
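The alternation described above, in which a sampling step on the design is followed by control optimization, can be mimicked on a toy problem. The sketch below is not DiffuseBot's algorithm. It assumes a scalar design `x`, a scalar control `u`, a Gaussian prior score standing in for the diffusion model, an analytic loss standing in for differentiable simulation, and made-up step sizes.

```python
import random


def prior_score(x, mu=2.0, sigma=1.0):
    """Score (gradient of log-density) of a Gaussian stand-in for the design prior."""
    return -(x - mu) / sigma ** 2


def task_loss(x, u):
    """Toy task loss: design x and control u should satisfy x * u == 1."""
    return (x * u - 1.0) ** 2


def loss_grads(x, u):
    """Analytic gradients of task_loss with respect to design and control."""
    residual = x * u - 1.0
    return 2.0 * residual * u, 2.0 * residual * x


def co_design(x, u, outer_steps=200, control_steps=5, step=0.05,
              kappa=1.0, control_lr=0.05, noise_scale=0.01, seed=0):
    """Alternate a noisy, score-guided design step with control descent."""
    rng = random.Random(seed)
    for _ in range(outer_steps):
        # Design update: follow the prior score, descend the task loss
        # (weighted by kappa), and add a small random perturbation.
        grad_x, _ = loss_grads(x, u)
        x += step * (prior_score(x) - kappa * grad_x)
        x += noise_scale * rng.gauss(0.0, 1.0)
        # Control update: adapt the control to the current design.
        for _ in range(control_steps):
            _, grad_u = loss_grads(x, u)
            u -= control_lr * grad_u
    return x, u


initial_loss = task_loss(0.5, 0.0)
design, control = co_design(0.5, 0.0)
final_loss = task_loss(design, control)
print(f"loss: {initial_loss:.3f} -> {final_loss:.6f}")
```

In this toy setting the design drifts toward the region the prior favors while the control keeps adapting to it. The weight `kappa` (a hypothetical name) trades off staying close to the prior against reducing the task loss.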

Was DiffuseBot really useful?

They conducted experiments and analysis to evaluate the effectiveness of the proposed method, comparing DiffuseBot with different baselines and performing ablation studies to analyze the impact of its components.

Their first table showed the results of incorporating embedding optimization and diffusion as co-design to improve physical utility. They drew 100 samples with preset random seeds for each entry to ensure valid comparisons. Performance increased across all tasks when the proposed techniques were used. However, they noted that sample-level performance did not always improve monotonically, owing to the stochasticity of the diffusion process and the low quality of gradients from differentiable simulation in certain scenarios.

Next, they compared DiffuseBot with various baselines commonly used in soft robot co-design. They ran each baseline method with 20 different random initializations and chose the best one, while for DiffuseBot they drew 20 samples and reported the best. DiffuseBot outperformed all baseline methods. They then showcased examples of soft robots generated by DiffuseBot in figures. These robots demonstrated the diversity and flexibility of DiffuseBot in generating designs for different tasks, and the figures also showed how the robots evolved from initial designs to improved ones that aligned with the task objective.

In the ablation analysis, they ran experiments on the crawling task to gain insight into the proposed method. They compared different approaches to embedding optimization and found that manually designing text prompts was not effective for functional robot design. Finetuning the diffusion model itself also did not yield better performance. They investigated the effect of applying diffusion as co-design at different steps and found a sweet spot for when to start applying it. In addition, they compared post-diffusion co-design optimization with their method and showed that their method performed slightly better.

They highlighted the flexibility of diffusion models in incorporating human feedback, demonstrating that different data distributions, including human textual feedback, could be combined within the diffusion as co-design framework.

Finally, they fabricated a physical robot for the gripping task to demonstrate the real-world applicability of their method. They used a 3D Carbon printer to reconstruct the generated design and employed tendon transmission for the actuators. They noted that physical robot fabrication and real-world transfer posed challenges and that this experiment served only as a proof of concept. Overall, the experiments and analysis supported the effectiveness and flexibility of DiffuseBot in generating soft robot designs for different tasks.