All-analog photoelectronic chip for high-speed vision tasks

Researchers from Tsinghua University recently reported their findings on vision chip design (DOI: 10.1038/s41586-023-06558-8).

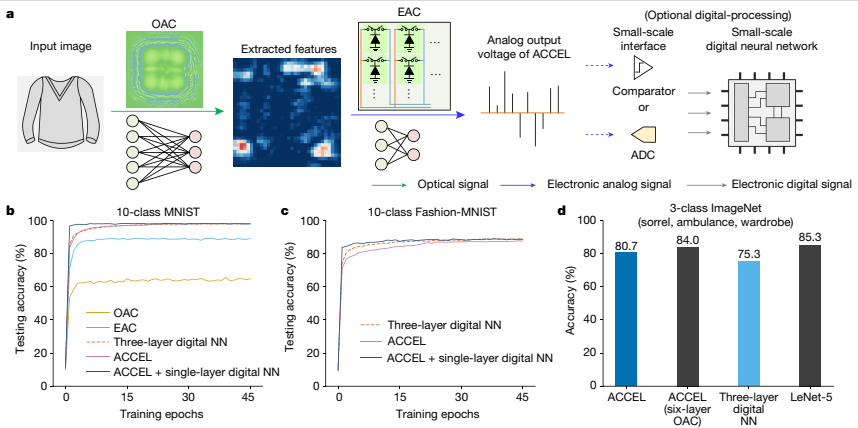

The researchers proposed an all-analog chip combining electronic and light computing (ACCEL) for high-speed vision tasks. ACCEL fuses diffractive optical analog computing (OAC) and electronic analog computing (EAC) in one chip. Diffractive optical computing extracts features, and the light-induced photocurrents are used directly for further calculation, which eliminates the need for analog-to-digital converters and yields a computing latency of 72 ns per frame. Because the chip processes incoherent or partially coherent light directly, it reduces power consumption and improves processing speed without additional sensors or light sources, combining the advantages of photonic and electronic computing.

ACCEL reaches a systemic computing speed of 4.6 peta-operations per second and a systemic energy efficiency of 74.8 peta-operations per second per watt, over one and three orders of magnitude higher than state-of-the-art computing chips, respectively. Experimentally, it achieves competitive classification accuracies of 85.5%, 82.0% and 92.6% for Fashion-MNIST, 3-class ImageNet classification and time-lapse video recognition, respectively, and demonstrates high-speed recognition with an accuracy of 85% over 100 testing samples for video judgement tasks. It also shows superior robustness in low-light conditions, preserving features better than digital neural networks (NNs) when light intensity is reduced. The chip's partial reconfigurability gives comparable performance on different tasks even with a fixed diffractive optical computing module, demonstrating its flexibility. Potential applications include wearable devices, robotics, autonomous driving, industrial inspection and medical diagnosis.

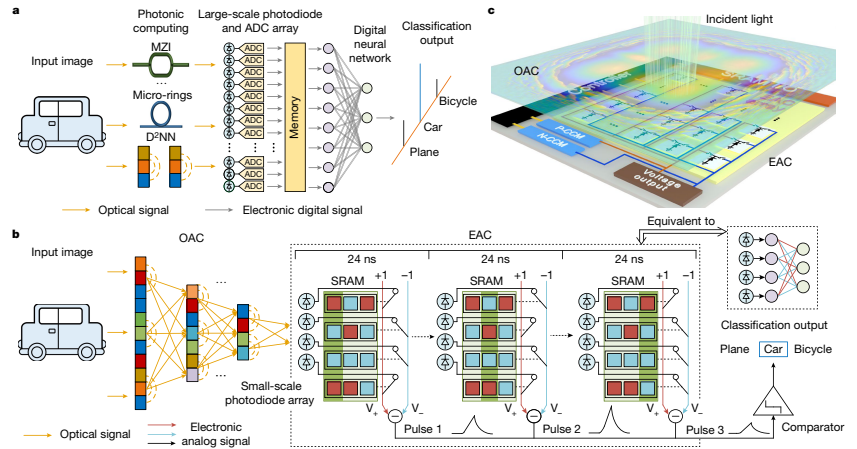

Conventional vision systems must convert optical signals into digital signals for post-processing. This conversion relies on large-scale photodiode arrays and power-hungry analog-to-digital converters (ADCs), and implementing precise optical nonlinearity and memory can further increase latency and power consumption at the system level. To address these challenges, the researchers proposed an optoelectronic hybrid architecture that reduces the need for massive ADCs, enabling high-speed and power-efficient vision tasks without compromising task performance. The architecture is all-analog and encodes information into light fields by illuminating targets with either coherent or incoherent light.

The key component is the ACCEL module, which is placed at the image plane of a common imaging system for direct image processing and classification. It consists of two parts: the optical analog computing (OAC) module and the electronic analog computing (EAC) module.

The OAC module is a multi-layer diffractive optical computing module that operates at the speed of light. It extracts features from high-resolution images using phase masks trained to process the data encoded in light fields through dot product operations and light diffraction, reducing the dimension of the data without any optoelectronic conversion.

The features encoded in the light fields leaving the OAC module are then fed to the EAC module, which consists of a photodiode array that converts the optical signals into analog electronic signals through the photoelectric effect. Each photodiode is connected to either the positive or the negative computing line according to the weights stored in static random-access memory (SRAM). The photocurrents are summed on both lines, and an analog subtractor computes the differential voltage as the output.

The EAC module acts as a nonlinear activation function and is equivalent to a binary-weighted fully connected neural network (NN). Its output can be used directly as the predicted label for classification or as the input to another digital NN. For all-analog computation, the number of output pulses (Ntt) corresponds to the number of output nodes in the binary NN and can be set according to the number of classification categories. With a single EAC core, ACCEL works sequentially, outputting multiple pulses that correspond to the Ntt output nodes of the binary NN. All of these functions can be integrated on a single chip in an all-analog manner, and the design remains compatible with existing digital NNs for more complex tasks.
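Functionally, summing photocurrents on a positive and a negative line and subtracting the two amounts to a matrix-vector product with weights in {+1, −1}. The minimal NumPy sketch below illustrates that view under stated assumptions: photocurrents arrive as a vector, a set SRAM bit routes a pixel to the positive line (a hypothetical convention), the loop evaluates one output node at a time to mirror a single EAC core emitting pulses sequentially, and the predicted label is the node with the largest output. The function name `eac_forward` is illustrative, not the chip's interface, and the photoelectric nonlinearity is not modeled.

```python
import numpy as np


def eac_forward(photocurrents, sram_bits):
    """Binary-weighted fully connected layer, evaluated like a single EAC core.

    photocurrents: (n_pixels,) non-negative photocurrents.
    sram_bits: (n_pixels, n_outputs); 1 routes a pixel to the positive line of an
               output node, 0 to the negative line (hypothetical convention).
    Returns the differential output of every node and the index of the largest one.
    """
    currents = np.asarray(photocurrents, dtype=float)
    bits = np.asarray(sram_bits, dtype=bool)
    outputs = np.empty(bits.shape[1])
    for j in range(bits.shape[1]):                 # one output node per pulse
        positive = currents[bits[:, j]].sum()      # current summed on the positive line
        negative = currents[~bits[:, j]].sum()     # current summed on the negative line
        outputs[j] = positive - negative           # analog subtractor
    return outputs, int(np.argmax(outputs))


out, label = eac_forward([1.0, 2.0, 0.5], [[1, 0], [1, 0], [0, 1]])
# out holds 2.5 and -2.5, so the predicted label is node 0
```

The loop gives the same result as `currents @ (2 * bits - 1)`; it is written per node only to reflect the sequential operation of a single core.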

They introduced an optical encoder and described how the weights of its phase masks can be trained using numerical beam propagation based on Rayleigh-Sommerfeld diffraction theory. Using a three-layer digital neural network, they reconstructed images from the MNIST dataset from only 2% sampling, demonstrating the data compression capability of the optical encoder. They also showed that when the output of the optical encoder feeds a digital neural network for classification, the same accuracy can be achieved with significantly reduced sampling, which means the number of analog-to-digital converters (ADCs) can be reduced by 98% without compromising accuracy. However, they noted that more complex tasks, or connections to simpler networks, may lower the compression rate and require a higher-dimensional feature space.
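The Rayleigh-Sommerfeld propagation mentioned above can be sketched numerically. The NumPy forward model below rests on assumptions that are not from the paper: it uses the angular spectrum method (an FFT-based evaluation of scalar Rayleigh-Sommerfeld diffraction), amplitude-encodes a 28 × 28 intensity image, passes it through three phase masks separated by free-space propagation, and pools the detector-plane intensity into 4 × 4 = 16 regions, about 2% of 784 pixels. The wavelength, pixel pitch, layer spacing, mask count and function names are hypothetical; the grid is not zero-padded, so the FFT wraps around at the edges; and training, which would backpropagate a task loss through this forward model to update the phase masks, is omitted.

```python
import numpy as np


def angular_spectrum_propagate(field, wavelength, pitch, distance):
    """Propagate a square 2-D complex field over `distance` (m) with the angular
    spectrum method, an FFT-based evaluation of scalar Rayleigh-Sommerfeld diffraction."""
    n = field.shape[0]
    fx = np.fft.fftfreq(n, d=pitch)                      # spatial frequencies (1/m)
    fxx, fyy = np.meshgrid(fx, fx, indexing="ij")
    arg = 1.0 / wavelength**2 - fxx**2 - fyy**2
    kz = 2 * np.pi * np.sqrt(np.maximum(arg, 0.0))       # axial wavenumber
    transfer = np.where(arg > 0, np.exp(1j * kz * distance), 0.0)  # evanescent waves dropped
    return np.fft.ifft2(np.fft.fft2(field) * transfer)


def optical_encoder(image, phase_masks, wavelength=532e-9, pitch=8e-6,
                    distance=1e-3, n_regions=4):
    """Forward pass of a hypothetical multi-layer diffractive encoder.

    image: (n, n) non-negative intensities; n must be divisible by n_regions.
    phase_masks: sequence of (n, n) phase profiles in radians (the trainable weights).
    Returns n_regions**2 features: detector-plane intensity summed over square regions.
    """
    field = np.sqrt(np.asarray(image, dtype=float)).astype(complex)  # amplitude encoding
    field = angular_spectrum_propagate(field, wavelength, pitch, distance)  # to first mask
    for phase in phase_masks:
        field = field * np.exp(1j * phase)               # phase-only modulation
        field = angular_spectrum_propagate(field, wavelength, pitch, distance)
    intensity = np.abs(field) ** 2                       # detectors measure intensity only
    block = intensity.shape[0] // n_regions
    pooled = intensity.reshape(n_regions, block, n_regions, block).sum(axis=(1, 3))
    return pooled.ravel()


rng = np.random.default_rng(0)
image = rng.random((28, 28))                             # stand-in for an MNIST digit
masks = [rng.uniform(0, 2 * np.pi, (28, 28)) for _ in range(3)]
features = optical_encoder(image, masks)
print(features.shape, features.size / image.size)        # 16 features, about 2% of 784 pixels
```

With these parameters every spatial frequency on the grid propagates and the phase masks are lossless, so the total detected intensity equals the input intensity; only the pooling into 16 regions discards information, which is the dimension reduction the encoder is trained to make useful.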

They also described the architecture of the electronic analog computing (EAC) module, which consists of 32 × 32 pixel circuits. These circuits form a calculation matrix of size 1,024 × N_output, where N_output is the number of output nodes; in the fabricated chip, N_output is at most 16. Each pixel circuit includes a photodiode that generates the photocurrent I_ph,i used for analog computing, together with three switches and one SRAM macro that stores the binary network weights w_ij. Depending on the value of the weight, the cathode of the photodiode is connected to either the positive computing line (V+) or the negative computing line (V−) for each output node. The on-chip controller writes the trained weights to the SRAM macros before inference. During inference, the photocurrents, routed according to their weights, discharge the computing lines, and the resulting voltage drop is used for further processing in the EAC.
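An idealized circuit model shows why discharging two computing lines implements a binary-weighted dot product. The sketch below assumes constant photocurrents during an integration time t, equal line capacitance C, and a common precharge voltage, with the drop on each line given by ΔV = I·t/C and floored at 0 V. The values of t, C and the precharge voltage, the function name `eac_discharge`, and the convention that a set SRAM bit selects V+ are all hypothetical; only the 32 × 32 array size and the 16-output limit come from the text.

```python
import numpy as np

N_PIXELS = 32 * 32      # 1,024 pixel circuits in the EAC array
N_OUTPUT_MAX = 16       # maximum number of output nodes on the fabricated chip


def eac_discharge(photocurrents, sram_bits, t_int=50e-9, c_line=1e-12, v_pre=1.0):
    """Idealized circuit model of one EAC inference (all parameter values assumed).

    photocurrents: (1024,) photocurrents I_ph,i in amperes.
    sram_bits: (1024, n_output) weights; True connects pixel i to the V+ line of
               output node j, False to the V- line (hypothetical convention).
    t_int, c_line, v_pre: integration time (s), line capacitance (F) and precharge
               voltage (V); illustrative values, not taken from the paper.
    Returns the voltage drop on V+ minus the drop on V- for each output node.
    """
    currents = np.asarray(photocurrents, dtype=float)
    w_plus = np.asarray(sram_bits, dtype=bool).astype(float)
    if currents.shape != (N_PIXELS,) or w_plus.ndim != 2 or w_plus.shape[0] != N_PIXELS:
        raise ValueError("expected 1,024 photocurrents and 1,024 weight rows")
    if w_plus.shape[1] > N_OUTPUT_MAX:
        raise ValueError("at most 16 output nodes")
    i_plus = currents @ w_plus               # total current discharging each V+ line
    i_minus = currents @ (1.0 - w_plus)      # total current discharging each V- line
    # Constant current into a linear capacitor: dV = I * t / C, floored at 0 V.
    v_plus = np.maximum(v_pre - i_plus * t_int / c_line, 0.0)
    v_minus = np.maximum(v_pre - i_minus * t_int / c_line, 0.0)
    return (v_pre - v_plus) - (v_pre - v_minus)


rng = np.random.default_rng(1)
currents = rng.uniform(0, 1e-9, N_PIXELS)                 # nA-scale photocurrents (assumed)
bits = rng.integers(0, 2, size=(N_PIXELS, 10)).astype(bool)
drops = eac_discharge(currents, bits)                     # one differential drop per node
```

In the linear regime the differential drop equals (t/C) Σ_i w_ij I_ph,i with w_ij = +1 for pixels routed to V+ and −1 for pixels routed to V−; the floor at 0 V marks where this idealized analog computation would saturate.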

They reported the classification accuracies achieved by ACCEL on various tasks. Numerical simulations showed competitive accuracies on 10-class MNIST, 10-class Fashion-MNIST and 3-class ImageNet, and ACCEL outperformed both EAC-only and OAC-only methods. Optional small-scale digital neural networks (NNs) can be connected to ACCEL at low cost for more complex tasks or time-lapse applications.

They also reported ACCEL's low computing latency and its robustness in low-light conditions. After diffractive optical computing extracts features as an optical encoder, the light-induced photocurrents are used directly for further calculation in the integrated analog computing chip, so no analog-to-digital converters are needed. ACCEL achieves a computing latency of 72 ns per frame and maintains competitive accuracy even in low-light conditions, which points to applications such as wearable devices, autonomous driving and industrial inspection.

Finally, they presented experimental measurements of ACCEL's energy efficiency and computing speed. ACCEL achieves a systemic computing speed of 4.55 × 10^3 TOPS (tera-operations per second) and a systemic energy efficiency of 7.48 × 10^4 TOPS W−1 (tera-operations per second per watt), roughly one and three orders of magnitude higher than state-of-the-art methods, respectively. The efficiency was calculated from the measured average systemic energy consumption of 4.4 nJ for 3-class ImageNet classification. These results highlight the energy efficiency and scalability of ACCEL and its suitability for diverse intelligent vision tasks.
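The reported figures can be cross-checked with simple arithmetic. The snippet below is a back-of-envelope check, not part of the paper, and it assumes that the 4.4 nJ is the energy per frame and that one frame's operations complete within the 72 ns latency.

```python
# Back-of-envelope cross-check of the reported ACCEL figures (not from the paper).
speed_ops = 4.55e3 * 1e12             # 4.55 x 10^3 TOPS expressed in operations per second
efficiency_ops_per_j = 7.48e4 * 1e12  # 7.48 x 10^4 TOPS/W = operations per joule
latency_s = 72e-9                     # reported latency per frame
energy_j = 4.4e-9                     # reported average energy, assumed to be per frame

ops_from_speed = speed_ops * latency_s            # assumes one frame per 72 ns latency
ops_from_energy = energy_j * efficiency_ops_per_j
implied_power_w = energy_j / latency_s            # average power if frames run back to back

print(f"speed: {speed_ops / 1e15:.2f} POPS, efficiency: {efficiency_ops_per_j / 1e15:.1f} POPS/W")
print(f"ops per frame: {ops_from_speed:.3g} (speed x latency) vs {ops_from_energy:.3g} (energy x efficiency)")
print(f"implied average power: {implied_power_w * 1e3:.1f} mW")
```

Under these assumptions both routes give roughly 3.3 × 10^8 operations per frame and an implied average power of about 61 mW, so the speed, latency, energy and efficiency values are mutually consistent; 4.55 × 10^3 TOPS and 7.48 × 10^4 TOPS W−1 also correspond to about 4.6 and 74.8 peta-operations per second (per watt).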